“English is no longer necessarily the lingua franca of the user. Perhaps there is no true lingua franca, but only the individual languages of the users.” (Brian King)

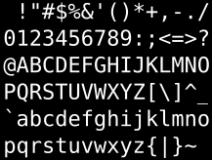

In use since the early days of computing, ASCII (American Standard Code for Information Interchange) is a 7-bit coded character set for information interchange in English (and Latin). It was first published in 1963 by the American Standards Association (ASA), the predecessor of ANSI (American National Standards Institute). Plain 7-bit ASCII, also called Plain Vanilla ASCII, is a set of 128 characters, 95 of which are printable unaccented characters (A-Z, a-z, digits, punctuation and basic symbols): the ones available on an American English keyboard.
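The figures above can be checked with a few lines of Python. This is only an illustrative sketch: the helper name `is_plain_ascii` is invented for the example, and the printable range is taken to run from the space character (code 32) to the tilde (code 126).

```python
# 7 bits give 2**7 = 128 possible values, numbered 0 through 127.
all_codes = range(128)

# Printable characters run from space (32) to tilde (126); the others are control codes.
printable = [chr(code) for code in all_codes if 32 <= code <= 126]

print(len(all_codes))      # 128
print(len(printable))      # 95
print("".join(printable))  # the full printable set on one line


def is_plain_ascii(text: str) -> bool:
    """Return True when every character of the text fits in 7 bits."""
    return all(ord(char) < 128 for char in text)


print(is_plain_ascii("resume"))  # True
print(is_plain_ascii("résumé"))  # False: the accented letters fall outside ASCII
```

The last line shows the limitation that motivated later encodings: a single accented letter is enough to take a word outside plain ASCII.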

As computer technology spread beyond North America, the accented characters of several European languages, and the characters of some other languages, were accommodated from 1986 onwards by 8-bit variants of ASCII, also called extended ASCII, each providing a set of 256 characters.
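Because each 8-bit variant assigns the upper 128 values in its own way, the same byte can stand for different characters depending on which variant is assumed. The sketch below illustrates this with three character sets picked for the example (ISO 8859-1 for Western European languages, ISO 8859-7 for Greek, ISO 8859-5 for Cyrillic); the text above does not name specific variants, so these are assumptions made for illustration.

```python
# One byte above the 7-bit ASCII range (0xE9 = 233).
raw = bytes([0xE9])

# Three 8-bit character sets chosen for illustration (Western European, Greek, Cyrillic).
code_pages = ["iso8859_1", "iso8859_7", "iso8859_5"]

# Decode the same byte under each character set.
decoded = {name: raw.decode(name) for name in code_pages}
for name, char in decoded.items():
    print(f"{name}: byte 0xE9 -> {char!r} (U+{ord(char):04X})")

# Bytes 0-127 keep their ASCII meaning in all three sets.
print(all(b"ASCII".decode(name) == "ASCII" for name in code_pages))  # True
```

A text file stored this way carries no indication of which variant was used, so a reader has to know or guess it.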

Brian King, director of the WorldWide Language Institute (WWLI), explained in September 1998: “Computer technology has traditionally been the sole domain of a ‘techie’ elite, fluent in both complex programming languages and in English — the universal language of science and technology. Computers were never designed to handle writing systems that couldn’t be translated into ASCII. There wasn’t much room for anything other than the 26 letters of the English alphabet in a coding system that originally couldn’t even recognize acute accents and umlauts — not to mention non-alphabetic systems like Chinese. But tradition has been turned upside down. Technology has been popularized. (…)

An extension of (local) popularization is the export of information technology around the world. Popularization has now occurred on a global scale and English is no longer necessarily the lingua franca of the user. Perhaps there is no true lingua franca, but only the individual languages of the users. One thing is certain — it is no longer necessary to understand English to use a computer, nor is it necessary to have a degree in computer science. A pull from non-English-speaking computer users and a push from technology companies competing for global markets has made localization a fast growing area in software and hardware development. This development has not been as fast as it could have been. The first step was for ASCII to become extended ASCII. This meant that computers could begin to start recognizing the accents and symbols used in variants of the English alphabet — mostly used by European languages. But only one language could be displayed on a page at a time. (…)

The most recent development [in 1998] is Unicode. Although still evolving and only just being incorporated into the latest software, this new coding system translates each character into 16 bits. Whereas 8-bit extended ASCII could only handle a maximum of 256 characters, Unicode can handle over 65,000 unique characters and therefore potentially accommodate all of the world’s writing systems on the computer. So now the tools are more or less in place. They are still not perfect, but at last we can surf the web in Chinese, Japanese, Korean, and numerous other languages that don’t use the Western alphabet. As the internet spreads to parts of the world where English is rarely used — such as China, for example, it is natural that Chinese, and not English, will be the preferred choice for interacting with it. For the majority of the users in China, their mother tongue will be the only choice.” (NEF Interview)

First published in January 1991, Unicode “provides a unique number for every character, no matter what the platform, no matter what the program, no matter what the language” (excerpt from the website). Originally designed as a 16-bit (double-byte) encoding and since extended well beyond 65,536 code points, this platform-independent standard provides a basis for the processing, storage and interchange of text data in any language. Unicode is maintained by the Unicode Consortium, is implemented through the encoding forms UTF-8, UTF-16 and UTF-32 (UTF: Unicode Transformation Format), and is a component of the specifications of the World Wide Web Consortium (W3C). Unicode has replaced ASCII for text files on Windows platforms since 1998. On the web, the Unicode encoding UTF-8 surpassed ASCII in December 2007.
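The difference between UTF-8, UTF-16 and UTF-32 is easiest to see by counting the bytes each one needs for the same character. The following is a minimal sketch using Python's built-in codecs; the function name `encoded_sizes` and the sample characters are choices made for this example, not part of any standard.

```python
def encoded_sizes(char: str) -> tuple[int, int, int]:
    """Return the byte length of one character in UTF-8, UTF-16 and UTF-32."""
    # The "-be" codecs fix the byte order, so no byte order mark is added.
    return (
        len(char.encode("utf-8")),
        len(char.encode("utf-16-be")),
        len(char.encode("utf-32-be")),
    )


# A Latin letter, an accented letter, a Chinese character, and one character above U+FFFF.
samples = ["A", "é", "中", "\U0001F600"]
for char in samples:
    utf8, utf16, utf32 = encoded_sizes(char)
    print(f"U+{ord(char):04X}: UTF-8 {utf8} bytes, UTF-16 {utf16} bytes, UTF-32 {utf32} bytes")

# Plain ASCII text is byte-for-byte identical when stored as UTF-8.
print("Hello".encode("ascii") == "Hello".encode("utf-8"))  # True
```

UTF-8 uses one to four bytes per character, UTF-16 uses two or four, and UTF-32 always uses four. The final line shows why UTF-8 was an easy successor to ASCII: existing ASCII files are already valid UTF-8.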

Copyright © 2011 Marie Lebert
